Key LLM Concepts

This lesson is part of The Basics of Prompt Engineering course.

[00:00 - 00:09] So we're going to look at a couple of very high-level concepts of how LLMs work. I'm sure most people have probably heard about tokenization or even have an idea of what it is.

[00:10 - 00:23] Tokenization is the transformation of text, that is, the string of words that you put into your prompts, into tokens, which are the minimum processable units in an LLM.

[00:24 - 00:32] From a user's perspective, an LLM is a black box with a lot of mathematics and statistics inside it. You enter a string.

[00:33 - 00:35] The string goes into the black box. And then another string comes out.

[00:36 - 00:45] That's in most use cases; obviously there are image generators and such. So tokens, both in the input and the output, are the minimum processable units.

[00:46 - 00:53] They're generally turned into vectors. And these are used to calculate the distance from other tokens; I think cosine distance is used.

[00:54 - 01:02] But again, I don't want to delve into the maths too much. But this is basically used to make semantic connections between different tokens.

[01:03 - 01:19] So if you're just doing purely syntax-based natural language processing, the word dog, for example, might be close to the word frog, just because they share a lot of letters. But it wouldn't be close to the word rottweiler.

[01:20 - 01:38] So what a lot of modern LLMs do is have these transformers so that the meaning of the word is somewhat embedded. And you have that similarity, a lower cosine distance, between two words that are more synonymous, even if the words don't resemble each other.
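To make the dog, frog and rottweiler example concrete, here is a minimal sketch comparing a letter-overlap measure with cosine distance. The three vectors are hand-made toy values chosen for illustration, not embeddings from any real model, and the function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def cosine_distance(a, b):
    """Lower distance means the vectors point in a more similar direction."""
    return 1.0 - cosine_similarity(a, b)

def shared_letter_ratio(word_a, word_b):
    """Surface-level similarity: shared letters divided by all letters used."""
    letters_a, letters_b = set(word_a), set(word_b)
    return len(letters_a & letters_b) / len(letters_a | letters_b)

# ASSUMPTION: toy 3-dimensional vectors invented for this example.
# Loosely, the dimensions stand for (dog-like, amphibian-like, pet-like).
toy_embeddings = {
    "dog": [0.9, 0.0, 0.8],
    "rottweiler": [1.0, 0.0, 0.6],
    "frog": [0.0, 0.9, 0.2],
}

for other in ("frog", "rottweiler"):
    letters = shared_letter_ratio("dog", other)
    distance = cosine_distance(toy_embeddings["dog"], toy_embeddings[other])
    print(f"dog vs {other}: shared letters {letters:.2f}, cosine distance {distance:.2f}")
```

By letters alone, dog looks closer to frog; with the toy vectors, dog ends up closer to rottweiler, which is the behaviour described above.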

[01:39 - 02:05] It's also worth noting that the usage cost for any LLM tool is usually measured in tokens. If you look, for example, at ChatGPT or AMP or something like that, at their non-free tiers, they usually charge for a plan that has X number of input tokens and Y number of output tokens, usually in the millions, usually starting from 1 million tokens.
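As a rough sketch of how token-based pricing adds up, the snippet below estimates a cost from input and output token counts. The prices and token counts are made-up placeholders, not any provider's real rates, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_price_per_million, output_price_per_million):
    """Estimate usage cost when input and output tokens are priced separately."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost

# ASSUMPTION: hypothetical prices per 1 million tokens, for illustration only.
HYPOTHETICAL_INPUT_PRICE = 2.00
HYPOTHETICAL_OUTPUT_PRICE = 8.00

cost = estimate_cost(
    input_tokens=200_000,
    output_tokens=50_000,
    input_price_per_million=HYPOTHETICAL_INPUT_PRICE,
    output_price_per_million=HYPOTHETICAL_OUTPUT_PRICE,
)
print(f"Estimated cost: {cost:.2f}")
```

Input and output are kept as separate parameters because, as noted above, plans typically account for them separately.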

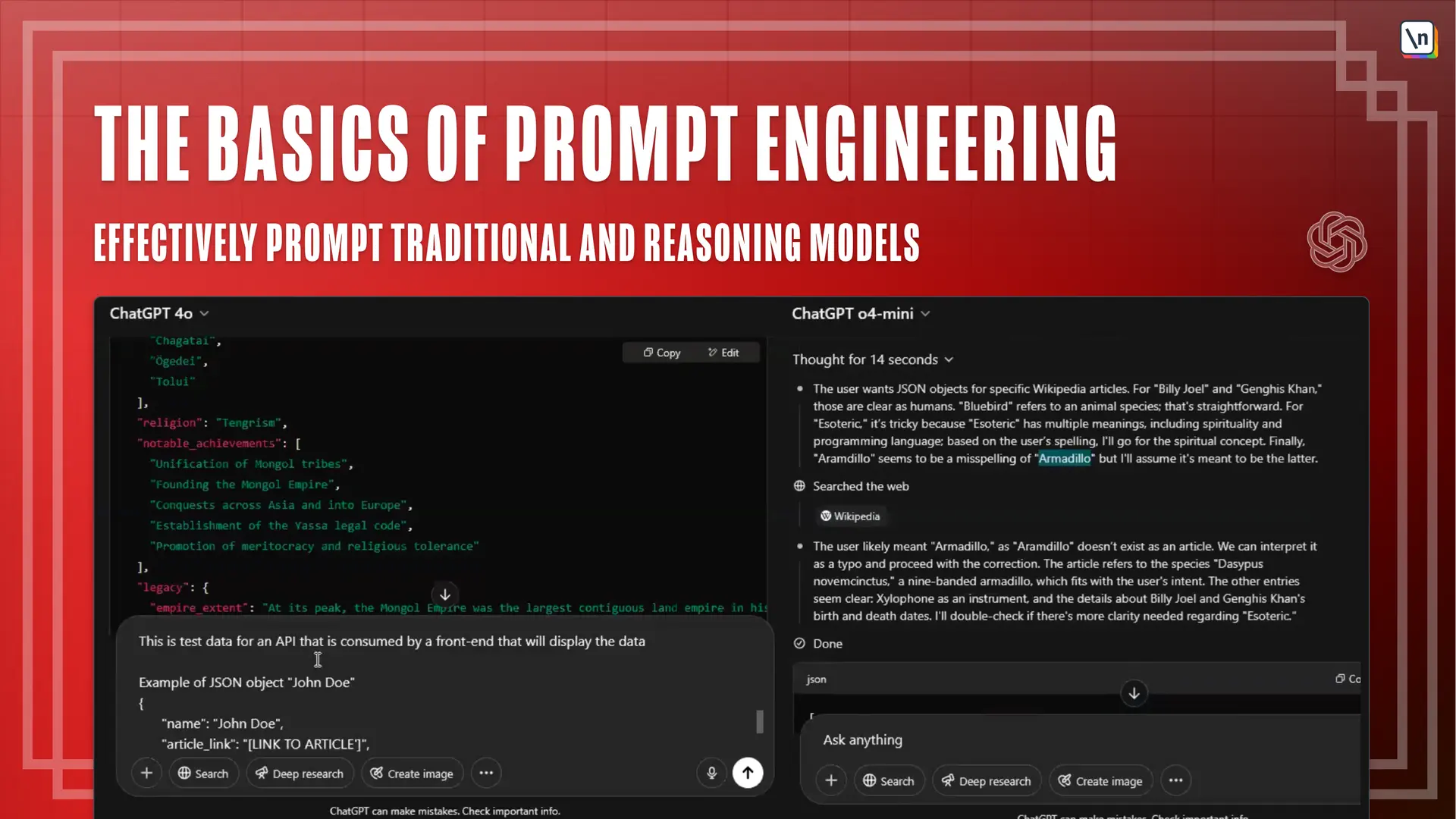

[02:06 - 02:15] And these prices are different for traditional versus reasoning models. Here, this is just a screenshot of a tokenizer tool.

[02:16 - 02:22] It literally just shows you the input prompt, the string. Here, it's specifically for GPT-4o and GPT-4o mini.

[02:23 - 02:29] And how that gets turned into tokens at the bottom of the screen. So you can see each word here in How Many Miles to Babylon.

[02:30 - 02:37] This is a book I studied in secondary school. You can see that each word here is being turned into a token.

[02:38 - 02:47] All helpfully colour-coded. Most mainline LLMs have their own tokenizer tool that's available for free in a web UI.

[02:48 - 03:00] If you search the name of the LLM followed by tokenizer, you'll usually get a UI like this, which shows you how the strings in your prompts are tokenized.
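For a feel of what a tokenizer tool is doing, here is a deliberately simplified sketch that splits a prompt into word and punctuation tokens and assigns each a numeric ID. This is an assumption-laden toy: real LLM tokenizers generally work on subword pieces and can split a single word into several tokens, so the counts here will not match a real tool. `toy_tokenize` and `build_vocab` are hypothetical names.

```python
import re

def toy_tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

prompt = "How Many Miles to Babylon?"
tokens = toy_tokenize(prompt)
vocab = build_vocab(tokens)
token_ids = [vocab[token] for token in tokens]

print(tokens)       # the string pieces
print(token_ids)    # the numbers a model would actually process
print(len(tokens))  # the token count, which is what limits and pricing use
```

The point is only the shape of the process: a string goes in, a list of numeric token IDs comes out, and the length of that list is the token count.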

[03:01 - 03:13] The next concept we need to know about is context windows and output token limits. Context windows basically just refer to the number of tokens an LLM can take.

[03:14 - 03:22] So the context window is the number of tokens an LLM can take as an input. In most cases, you don't need to worry about this context window.

[03:23 - 03:36] It's usually quite large, often in the thousands of tokens or more. If you're, for example, trying to put your entire code base in as context, if you're doing something with code, then it might be a bit much.

[03:37 - 03:49] Or if you're, for example, putting in the entirety of War and Peace by Leo Tolstoy as part of your prompts, that can probably run afoul of a lot of context windows as well. But in most cases, you don't really need to worry about this.

[03:50 - 03:58] It's just something to be aware of. The output token limit is effectively the same concept but for your output.

[03:59 - 04:09] Normally, at least with basic everyday prompts, the output is going to be longer than the input because a lot of prompts are going to be one or two sentences. The outputs tend to be longer.

[04:10 - 04:27] I'm sure if you guys have used LLMs, you've probably noticed that they, unless you tell them otherwise, have a tendency to be a little bit verbose, much like myself. But yeah, so it's worth knowing that there's a limit to the input, there's a limit to the output, and it's measured in tokens.
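The two limits can be sketched as a simple check before sending a request. The limit values below are hypothetical placeholders, not the limits of any real model, and the sketch follows the lesson's framing of the context window as an input limit; some providers also count output tokens against the same window, so check the documentation for the model you use.

```python
from dataclasses import dataclass

@dataclass
class ModelLimits:
    context_window: int      # maximum input tokens, as framed in this lesson
    max_output_tokens: int   # maximum tokens the model may generate

def check_request(input_tokens, requested_output_tokens, limits):
    """Return a list of human-readable problems; an empty list means it fits."""
    problems = []
    if input_tokens > limits.context_window:
        over = input_tokens - limits.context_window
        problems.append(f"input exceeds context window by {over} tokens")
    if requested_output_tokens > limits.max_output_tokens:
        over = requested_output_tokens - limits.max_output_tokens
        problems.append(f"requested output exceeds limit by {over} tokens")
    return problems

# ASSUMPTION: made-up limits for illustration.
hypothetical_limits = ModelLimits(context_window=8_000, max_output_tokens=1_000)

print(check_request(50, 500, hypothetical_limits))         # a short everyday prompt
print(check_request(750_000, 500, hypothetical_limits))    # a whole novel pasted in
```

A one or two sentence prompt passes easily, while pasting in an entire book or code base is the kind of input that trips the first check.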

[04:28 - 04:37] We're not really going to delve into anything deeper than that. We're not going to talk about RAG or fine-tuning or anything like that, because this is going to be a beginner-friendly tutorial.